To integrate LlamaIndex with Llama 2, you can follow these steps:

1. First, install the dependencies for both sides. LlamaIndex is published on PyPI as `llama-index`. Llama 2 itself is not a Python package, so you also need a runtime that can load its weights, for example Hugging Face Transformers (which runs on PyTorch) or llama.cpp for quantized files.
2. Next, import the modules you need. The LlamaIndex package is imported as `llama_index`. There is no official `llama_2` module; the model is loaded through whichever runtime you installed, usually via one of LlamaIndex's LLM integration packages.
3. Once the model is wrapped as a LlamaIndex LLM, LlamaIndex can send it prompts, for example through the LLM's `complete()` or `chat()` methods, or through a query engine built over an index of your documents. These interfaces take text in and return text out; you do not pass tensors yourself.
4. ...
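LlamaIndex's main job is retrieval-augmented generation: find passages relevant to a question, pack them into a prompt, and send that text to the LLM. The stdlib-only sketch below illustrates that pattern under stated assumptions; it is not LlamaIndex's API. The word-overlap `retrieve` is a toy stand-in for embedding search, and `llm` is a hypothetical callable marking where a real Llama 2 call (for example through a LlamaIndex LLM integration) would go.

```python
import re
from typing import Callable, List, Set


def word_set(text: str) -> Set[str]:
    """Lowercase word set; a toy stand-in for real embeddings."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents sharing the most words with the query."""
    query_words = word_set(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & word_set(doc)),
        reverse=True,  # sorted() stays stable, so ties keep their input order
    )
    return ranked[:top_k]


def build_prompt(query: str, context: List[str]) -> str:
    """Stuff the retrieved passages into a single text prompt."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


def answer(query: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Retrieve, build the prompt, and hand plain text to the model."""
    return llm(build_prompt(query, retrieve(query, documents)))


if __name__ == "__main__":
    docs = [
        "Llama 2 ranges from 7 billion to 70 billion parameters.",
        "Llama-2-Chat models are optimized for dialogue use cases.",
        "GGUF and GPTQ are quantized model file formats.",
    ]

    def echo_llm(prompt: str) -> str:
        # Hypothetical placeholder for a real Llama 2 call: echo the prompt back.
        return prompt

    print(answer("Which Llama 2 models are optimized for dialogue?", docs, echo_llm))
```

In a real setup, LlamaIndex replaces `retrieve` with a vector index and `llm` with a configured model object; the point of the sketch is that the model boundary is text in, text out.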

Meta And Microsoft Release Llama 2 Free For Commercial Use And Research

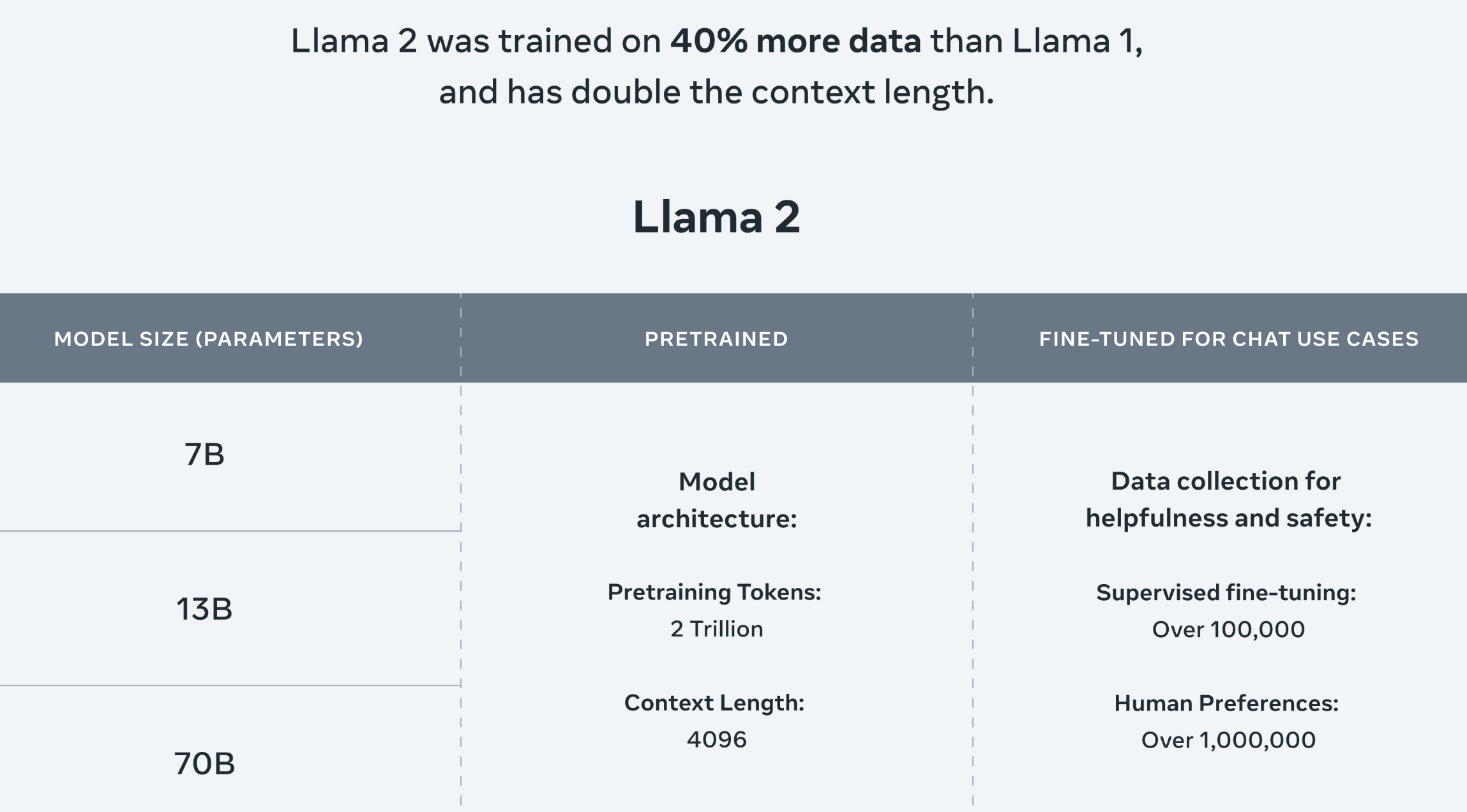

The license defines "Agreement" as "the terms and conditions for use, reproduction, distribution and ..." Meta presents Llama 2 as the next generation of its open source large language model, available for free for research and commercial use. Llama 2 is a large language model capable of generating text and code in response to prompts, and downloading it means agreeing to the Llama 2 license terms. Companies looking to use Llama 2 in applications with more than 700 million monthly active users must request a special license from Meta. Meta's own description reads: "Large language model Llama 2: open source, free for research and commercial use. We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, ..."

Community repositories provide GGUF, GPTQ and GGML format model files for Meta's Llama 2 70B Chat, as well as GGML format model files for Meta's Llama 2 7B Chat; the GPTQ links for LLaMA-2 are in the wiki. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. The fine-tuned LLMs, called Llama-2-Chat, are optimized for dialogue use cases. One community variant fine-tunes Llama-2 70B on an uncensored/unfiltered Wizard-Vicuna conversation dataset, and a forum post asks, as per its title, whether there is an uncensored version of Llama 2 Chat 70B.

Hardware is the main constraint at this size: there is no way to run a Llama-2-70B chat model entirely on an 8 GB GPU alone. Fine-tuning is more accessible: with LoRA or QLoRA methods, it is possible to fine-tune even a non-quantized model on an RTX 3090 or 4090 up to ... A simple repo supports fine-tuning LLMs with both GPTQ and bitsandbytes quantization. To launch a model, execute the command given in its repo, remembering to replace ...

User reports are mixed. "This is an implementation of TheBloke/Llama-2-70b-Chat. I've tested this with the 70B base model TheBloke/Llama-2-70B-GPTQ and the 70B chat model." "However, their service was frequently returning CUDA error: device-side assert triggered." "We will try to replicate the same with the meta-llama/Llama-2-70b-chat-hf model when we are granted ..." ExLlama has now been updated to support Llama 2 70B, so make sure you're using the latest version of ... Loading TheBloke/Llama-2-7b can fail because it does not appear to have a file named pytorch_model.bin or tf_model.h5. TheBloke had two 70B versions with no files uploaded yet, but clicking on either page now returns a 404; "I'm guessing that ..."
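The 8 GB limit mentioned above follows from simple arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by 8. The sketch below uses nominal sizes (7B, 70B) and counts weights only, ignoring the KV cache, activations and runtime overhead, so real requirements are higher. Real quantized files such as GGUF or GPTQ are also typically a little larger than this ideal because they store per-group scaling factors. The function name is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    gpu_gb = 8  # the 8 GB GPU from the text
    for label, params in [("7B", 7e9), ("70B", 70e9)]:  # nominal model sizes
        for bits in (16, 8, 4):  # fp16, 8-bit and 4-bit quantization
            gb = weight_memory_gb(params, bits)
            verdict = "weights fit" if gb <= gpu_gb else "weights do not fit"
            print(f"Llama 2 {label} at {bits}-bit: ~{gb:.1f} GB, {verdict} in {gpu_gb} GB")
```

Even at 4 bits, the 70B weights alone need about 35 GB, which is why 70B setups on small GPUs split layers between GPU and CPU memory or use several GPUs.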

Llama 2 Is Not Open Source (Digital Watch Observatory)


When chatting with Llama 2, you can customize the personality of the chatbot by clicking the settings button. Llama 2, the second-generation large language model from Meta, offers more powerful features and capabilities than its predecessor. For coding, you can try Code Llama, Meta's code-specialized model built on Llama 2, either through a free online coding assistant or by downloading Meta's open code models. What sets Llama 2 apart is that its weights are openly released (Meta calls it open source), so you can fine-tune and customize it to your heart's content. Meta reports that Llama 2 outperforms other open source language models on various external benchmarks, including reasoning. Whether you're looking for a chatbot that can handle complex tasks or simply want to explore the capabilities of this AI technology, Llama 2 is definitely worth ...
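Chat front ends typically implement a "personality" setting as a system prompt. For Llama-2-Chat, the system prompt sits inside `<<SYS>>` tags within the first `[INST]` turn. The sketch below follows the prompt layout used by Meta's reference chat code; the function name `build_llama2_chat_prompt` and the `(user, assistant)` history structure are hypothetical conveniences, not a library API.

```python
from typing import List, Optional, Tuple

# Delimiters used by the Llama-2-Chat prompt format.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_chat_prompt(
    history: List[Tuple[str, str]],
    user_message: str,
    system_prompt: Optional[str] = None,
) -> str:
    """Format a conversation as a Llama-2-Chat prompt string.

    history holds finished (user, assistant) turns; user_message is the new
    turn the model should answer. Hypothetical helper for illustration.
    """
    turns: List[Tuple[str, Optional[str]]] = [*history, (user_message, None)]
    prompt = ""
    for i, (user, assistant) in enumerate(turns):
        if i == 0 and system_prompt:
            # The system prompt ("personality") is folded into the first user turn.
            user = f"{B_SYS}{system_prompt}{E_SYS}{user}"
        prompt += f"<s>{B_INST} {user.strip()} {E_INST}"
        if assistant is not None:
            # Finished turns end with the assistant reply and an end-of-sequence marker.
            prompt += f" {assistant.strip()} </s>"
    return prompt


if __name__ == "__main__":
    print(build_llama2_chat_prompt(
        history=[("Hi", "Hello! How can I help?")],
        user_message="Tell me a llama fact.",
        system_prompt="You are a cheerful assistant who loves llamas.",
    ))
```

If your tokenizer already prepends the beginning-of-sequence token (Hugging Face's Llama tokenizer typically does by default), drop the literal `<s>` before the first turn so it is not duplicated.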
